Probability

Paper 2, Section I, D

A coin has probability of landing heads. Let be the probability that the number of heads after tosses is even. Give an expression for in terms of . Hence, or otherwise, find .
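A small exact check, offered as a sketch: the question's symbols were lost, so `p` (heads probability) and `n` (number of tosses) are assumed names, and `prob_even_heads` is a hypothetical helper. Conditioning on the last toss, the parity of the head count stays the same with probability 1 - p and flips with probability p. Iterating that step with exact fractions lets you test whatever closed form you derive.

```python
from fractions import Fraction


def prob_even_heads(p, n):
    """Exact P(number of heads in n tosses is even) for a coin with P(heads) = p.

    Conditioning on the last toss: parity is unchanged with probability 1 - p
    and flips with probability p.
    """
    q = Fraction(1)  # after 0 tosses there are 0 heads, which is even
    for _ in range(n):
        q = q * (1 - p) + (1 - q) * p
    return q


if __name__ == "__main__":
    p = Fraction(1, 3)  # hypothetical heads probability
    for n in range(6):
        print(n, prob_even_heads(p, n))
```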

Paper 2, Section I, F

Let be a continuous random variable taking values in . Let the probability density function of be

where is a constant.

Find the value of and calculate the mean, variance and median of .

[Recall that the median of is the number such that

Paper 2, Section II, 10E

(a) Alanya repeatedly rolls a fair six-sided die. What is the probability that the first number she rolls is a 1, given that she rolls a 1 before she rolls a

(b) Let be a simple symmetric random walk on the integers starting at , that is,

where is a sequence of IID random variables with . Let be the time that the walk first hits 0.

(i) Let be a positive integer. For , calculate the probability that the walk hits 0 before it hits .

(ii) Let and let be the event that the walk hits 0 before it hits 3. Find . Hence find .

(iii) Let and let be the event that the walk hits 0 before it hits 4. Find .
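To check an answer to (i) numerically, one might simulate the walk directly. This Monte Carlo sketch is not part of the question: `start` and `top` stand in for the elided starting point and upper barrier, and `estimate_hit_zero_first` is a hypothetical helper. With a fixed seed the output is reproducible, and its error should be of order 1/sqrt(trials).

```python
import random


def hits_zero_first(start, top, rng):
    """Run one simple symmetric random walk from `start` until it hits 0 or `top`.

    Returns True if 0 is reached first.
    """
    x = start
    while 0 < x < top:
        x += rng.choice((-1, 1))
    return x == 0


def estimate_hit_zero_first(start, top, trials=20_000, seed=0):
    """Monte Carlo estimate of P(walk from `start` hits 0 before `top`)."""
    rng = random.Random(seed)
    hits = sum(hits_zero_first(start, top, rng) for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for k in range(1, 5):
        print(k, estimate_hit_zero_first(k, 5))
```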

Paper 2, Section II, 12F

State and prove Chebyshev's inequality.

Let be a sequence of independent, identically distributed random variables such that

for some , and let be a continuous function.

(i) Prove that

is a polynomial function of , for any natural number .

(ii) Let . Prove that

where is the set of natural numbers such that .

(iii) Show that

as . [You may use without proof that, for any , there is a such that for all with .]
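The expectation in (i) is the Bernstein polynomial of f, under the assumption (the symbols are elided) that each X_i equals 1 with probability x and 0 otherwise, so that X_1 + ... + X_n is Binomial(n, x). The sketch below evaluates it exactly with fractions; the name `bernstein` is our own. Trying a continuous but non-smooth f, such as |t - 1/2|, illustrates the uniform convergence claimed in (iii).

```python
from fractions import Fraction
from math import comb


def bernstein(f, n, x):
    """E[f(S_n / n)] for S_n ~ Binomial(n, x), i.e. the degree-n Bernstein polynomial.

    Assumes the X_i are Bernoulli(x); the result is a polynomial in x of degree <= n.
    """
    return sum(f(Fraction(k, n)) * comb(n, k) * x**k * (1 - x) ** (n - k)
               for k in range(n + 1))


if __name__ == "__main__":
    f = lambda t: abs(t - Fraction(1, 2))  # continuous but not differentiable at 1/2
    x = Fraction(1, 2)
    for n in (10, 100, 400):
        print(n, float(bernstein(f, n, x)))  # approaches f(1/2) = 0 slowly
```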

Paper 2, Section II, 9E

(a) (i) Define the conditional probability of the event given the event . Let be a partition of the sample space such that for all . Show that, if ,

(ii) There are urns, the th of which contains red balls and blue balls. Alice picks an urn (uniformly) at random and removes two balls without replacement. Find the probability that the first ball is blue, and the conditional probability that the second ball is blue, given that the first is blue. [You may assume, if you wish, that .]

(b) (i) What is meant by saying that two events and are independent? Two fair (6-sided) dice are rolled. Let be the event that the sum of the numbers shown is , and let be the event that the first die shows . For what values of and are the two events and independent?

(ii) The casino at Monte Corona features the following game: three coins each show heads with probability and tails otherwise. The first counts 10 points for a head and 2 for a tail; the second counts 4 points for both a head and a tail; and the third counts 3 points for a head and 20 for a tail. You and your opponent each choose a coin. You cannot both choose the same coin. Each of you tosses your coin once and the person with the larger score wins the jackpot. Would you prefer to be the first or the second to choose a coin?

Paper 2, Section II, D

Let be the disc of radius 1 with centre at the origin . Let be a random point uniformly distributed in . Let be the polar coordinates of . Show that and are independent and find their probability density functions and .

Let and be three random points selected independently and uniformly in . Find the expected area of triangle and hence find the probability that lies in the interior of triangle .

Find the probability that and are the vertices of a convex quadrilateral.

Paper 1, Section I, F

A robot factory begins with a single generation-0 robot. Each generation- robot independently builds some number of generation- robots before breaking down. The number of generation- robots built by a generation- robot is or 3 with probabilities and respectively. Find the expectation of the total number of generation- robots produced by the factory. What is the probability that the factory continues producing robots forever?

[Standard results about branching processes may be used without proof as long as they are carefully stated.]

Paper 1, Section II, F

(a) Let be a random variable. Write down the probability density function (pdf) of , and verify that it is indeed a pdf. Find the moment generating function (mgf) of and hence, or otherwise, verify that has mean 0 and variance 1.

(b) Let be a sequence of IID random variables. Let and let . Find the distribution of .

(c) Let . Find the mean and variance of . Let and let .

If is a sequence of random variables and is a random variable, what does it mean to say that in distribution? State carefully the continuity theorem and use it to show that in distribution.

[You may not assume the central limit theorem.]

Paper 1, Section II, F

Let be events in some probability space. State and prove the inclusion-exclusion formula for the probability . Show also that

Suppose now that and that whenever we have . Show that there is a constant independent of such that .

Paper 2, Section I, 3F

(a) Prove that as .

(b) State Stirling's approximation for n!.

(c) A school party of boys and girls travel on a red bus and a green bus. Each bus can hold children. The children are distributed at random between the buses.

Let be the event that the boys all travel on the red bus and the girls all travel on the green bus. Show that

Paper 2, Section I, F

Let and be independent exponential random variables each with parameter 1. Write down the joint density function of and .

Let and . Find the joint density function of and .

Are and independent? Briefly justify your answer.

Paper 2, Section II, F

Let be events in some probability space. Let be the number of that occur (so is a random variable). Show that

and

[Hint: Write where .]

A collection of lightbulbs are arranged in a circle. Each bulb is on independently with probability . Let be the number of bulbs such that both that bulb and the next bulb clockwise are on. Find and .

Let be the event that there is at least one pair of adjacent bulbs that are both on.

Use Markov's inequality to show that if then as .

Use Chebyshev's inequality to show that if then as .
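For small circles the mean and variance of N can be computed by brute force, which gives a check on the formulas obtained from the hint. In this sketch `n` (number of bulbs) and `p` (probability a bulb is on) are assumed names for the elided symbols, and `adjacent_on_moments` is hypothetical. Enumeration takes 2^n steps, so it only serves as a check for small n.

```python
from fractions import Fraction
from itertools import product


def adjacent_on_moments(n, p):
    """Exact E[N] and Var(N) by enumerating all 2^n on/off patterns.

    N counts bulbs i (0 <= i < n) such that bulb i and bulb (i + 1) mod n
    are both on; each bulb is on independently with probability p.
    """
    mean = Fraction(0)
    second = Fraction(0)
    for state in product((0, 1), repeat=n):
        on = sum(state)
        weight = p**on * (1 - p) ** (n - on)
        count = sum(state[i] & state[(i + 1) % n] for i in range(n))
        mean += weight * count
        second += weight * count * count
    return mean, second - mean * mean


if __name__ == "__main__":
    print(adjacent_on_moments(5, Fraction(1, 2)))
```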

Paper 2, Section II, F

Recall that a random variable in is bivariate normal or Gaussian if is normal for all . Let be bivariate normal.

(a) (i) Show that if is a real matrix then is bivariate normal.

(ii) Let and . Find the moment generating function of and deduce that the distribution of a bivariate normal random variable is uniquely determined by and .

(iii) Let and for . Let be the correlation of and . Write down in terms of some or all of and . If , why must and be independent?

For each , find . Hence show that for some normal random variable in that is independent of and some that should be specified.

(b) A certain species of East Anglian goblin has left arm of mean length with standard deviation , and right arm of mean length with standard deviation . The correlation of left- and right-arm-length of a goblin is . You may assume that the distribution of left- and right-arm-lengths can be modelled by a bivariate normal distribution. What is the probability that a randomly selected goblin has longer right arm than left arm?

[You may give your answer in terms of the distribution function of a random variable . That is, .]
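Since R - L is a linear function of a bivariate normal vector, it is normal with mean mu_R - mu_L and variance sigma_L^2 + sigma_R^2 - 2 rho sigma_L sigma_R, so P(R > L) is Phi of its mean divided by its standard deviation. The question's numbers did not survive extraction, so the arguments below are placeholders and the function names are hypothetical; Phi is computed from `math.erf`.

```python
from math import erf, sqrt


def std_normal_cdf(z):
    """Phi(z) for a standard normal random variable, via the error function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def prob_right_longer(mu_left, sd_left, mu_right, sd_right, rho):
    """P(R > L) when (L, R) is bivariate normal with correlation rho.

    R - L is normal with mean mu_right - mu_left and variance
    sd_left**2 + sd_right**2 - 2 * rho * sd_left * sd_right.
    """
    mean = mu_right - mu_left
    sd = sqrt(sd_left**2 + sd_right**2 - 2 * rho * sd_left * sd_right)
    return std_normal_cdf(mean / sd)  # P(R - L > 0) = Phi(mean / sd)


if __name__ == "__main__":
    # Placeholder values, not the ones from the question.
    print(prob_right_longer(10.0, 1.0, 10.5, 1.5, 0.6))
```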

Paper 2, Section II, F

Let and be positive integers with and let be a real number. A random walk on the integers starts at . At each step, the walk moves up 1 with probability and down 1 with probability . Find, with proof, the probability that the walk hits before it hits 0.

Patricia owes a very large sum (!) of money to a member of a violent criminal gang. She must return the money this evening to avoid terrible consequences but she only has !. She goes to a casino and plays a game with the probability of her winning being . If she bets on the game and wins then her is returned along with a further ; if she loses then her is lost.

The rules of the casino allow Patricia to play the game repeatedly until she runs out of money. She may choose the amount that she bets to be any integer a with , but it must be the same amount each time. What choice of would be best and why?

What choice of would be best, and why, if instead the probability of her winning the game is ?
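The hitting probability in the first part can be computed exactly for given numbers, which makes comparing stakes easy. This sketch assumes, since the amounts are elided, that Patricia holds `fortune`, needs `2 * fortune`, and only uses stakes that divide `fortune`; betting a each time is then a walk in units of a from fortune/a to 2*fortune/a. The name `prob_hit_top_first` is hypothetical. It relies on h(k) = P(hit top before 0 from k) satisfying h(k) = p h(k+1) + (1 - p) h(k-1) with h(0) = 0, so one upward pass of the recursion from h(1) = 1, rescaled by h(top), solves the boundary-value problem.

```python
from fractions import Fraction


def prob_hit_top_first(start, top, p):
    """Exact P(walk from `start` hits `top` before 0), steps +1 w.p. p, -1 w.p. 1 - p.

    Any solution of h(k) = p*h(k+1) + (1-p)*h(k-1) with h(0) = 0 is a multiple
    of the one with h(1) = 1, so build that one and rescale so h(top) = 1.
    Requires top >= 1 and 0 < p <= 1.
    """
    q = 1 - p
    h = [Fraction(0), Fraction(1)]
    for k in range(1, top):
        h.append((h[k] - q * h[k - 1]) / p)
    return h[start] / h[top]


if __name__ == "__main__":
    # Hypothetical numbers: Patricia holds 12, needs 24, wins each game w.p. 2/5.
    fortune, p = 12, Fraction(2, 5)
    for a in (1, 2, 3, 4, 6, 12):
        # Betting a each time is a walk in units of a from fortune/a to 2*fortune/a.
        print(a, float(prob_hit_top_first(fortune // a, 2 * fortune // a, p)))
```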

Paper 2, Section II, F

(a) State the axioms that must be satisfied by a probability measure on a probability space .

Let and be events with . Define the conditional probability .

Let be pairwise disjoint events with for all and . Starting from the axioms, show that

and deduce Bayes' theorem.

(b) Two identical urns contain white balls and black balls. Urn I contains 45 white balls and 30 black balls. Urn II contains 12 white balls and 36 black balls. You do not know which urn is which.

(i) Suppose you select an urn and draw one ball at random from it. The ball is white. What is the probability that you selected Urn I?

(ii) Suppose instead you draw one ball at random from each urn. One of the balls is white and one is black. What is the probability that the white ball came from Urn I?

(c) Now suppose there are identical urns containing white balls and black balls, and again you do not know which urn is which. Each urn contains 1 white ball. The th urn contains black balls . You select an urn and draw one ball at random from it. The ball is white. Let be the probability that if you replace this ball and again draw a ball at random from the same urn then the ball drawn on the second occasion is also white. Show that as

Paper 2, Section I, F

(a) State the Cauchy-Schwarz inequality and Markov's inequality. State and prove Jensen's inequality.

(b) For a discrete random variable , show that implies that is constant, i.e. there is such that .

Paper 2, Section I, F

Let and be independent Poisson random variables with parameters and respectively.

(i) Show that is Poisson with parameter .

(ii) Show that the conditional distribution of given is binomial, and find its parameters.
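Both parts can be checked numerically: convolve the two Poisson mass functions for (i), and divide the joint mass function by the mass function of the sum for (ii). The names `lam` and `mu` are assumed stand-ins for the elided parameters, and the helpers are hypothetical; comparing `conditional_pmf` with a binomial mass function using your parameters confirms (ii).

```python
from math import exp, factorial


def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam)."""
    return exp(-lam) * lam**k / factorial(k)


def pmf_of_sum(n, lam, mu):
    """P(X + Y = n) by convolution, for independent X ~ Poisson(lam), Y ~ Poisson(mu)."""
    return sum(poisson_pmf(k, lam) * poisson_pmf(n - k, mu) for k in range(n + 1))


def conditional_pmf(k, n, lam, mu):
    """P(X = k | X + Y = n), computed directly from the joint mass function."""
    return poisson_pmf(k, lam) * poisson_pmf(n - k, mu) / pmf_of_sum(n, lam, mu)


if __name__ == "__main__":
    print([round(conditional_pmf(k, 4, 1.5, 2.5), 6) for k in range(5)])
```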

Paper 2, Section II, 10F

(a) Let and be independent random variables taking values , each with probability , and let . Show that and are pairwise independent. Are they independent?

(b) Let and be discrete random variables with mean 0, variance 1, covariance . Show that .

(c) Let be discrete random variables. Writing , show that .
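Part (a) is small enough to verify by listing the sample space. The sketch assumes, since the definitions are elided, that X and Y each take the values -1 and 1 with probability 1/2 and that the third variable is Z = XY, a common choice for this example; the helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Sample space: (X, Y) uniform on {-1, 1}^2, with Z = X * Y (assumed setup).
OUTCOMES = [(x, y, x * y) for x, y in product((-1, 1), repeat=2)]
WEIGHT = Fraction(1, len(OUTCOMES))


def prob(event):
    """Probability of an event given as a predicate on the triple (X, Y, Z)."""
    return sum(WEIGHT for w in OUTCOMES if event(w))


def pairwise_independent():
    """Check P(A = a, B = b) = P(A = a) P(B = b) for every pair of the three variables."""
    for i, j in ((0, 1), (0, 2), (1, 2)):
        for a, b in product((-1, 1), repeat=2):
            joint = prob(lambda w: w[i] == a and w[j] == b)
            if joint != prob(lambda w: w[i] == a) * prob(lambda w: w[j] == b):
                return False
    return True


def mutually_independent():
    """Check that the joint mass function of all three factorises."""
    for a, b, c in product((-1, 1), repeat=3):
        joint = prob(lambda w: w == (a, b, c))
        marginals = (prob(lambda w: w[0] == a) * prob(lambda w: w[1] == b)
                     * prob(lambda w: w[2] == c))
        if joint != marginals:
            return False
    return True


if __name__ == "__main__":
    print(pairwise_independent(), mutually_independent())
```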

Paper 2, Section II, F

For a symmetric simple random walk on starting at 0, let .

(i) For and , show that

(ii) For , show that and that

(iii) Prove that .

Paper 2, Section II, F

(a) Consider a Galton-Watson process . Prove that the extinction probability is the smallest non-negative solution of the equation where . [You should prove any properties of Galton-Watson processes that you use.]

In the case of a Galton-Watson process with

find the mean population size and compute the extinction probability.

(b) For each , let be a random variable with distribution . Show that

in distribution, where is a standard normal random variable.

Deduce that
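The characterisation in part (a) also gives a practical way to compute an extinction probability: P(Z_n = 0) = G(P(Z_{n-1} = 0)) increases to the extinction probability, so iterating s -> G(s) from s = 0 converges to the smallest non-negative root. The offspring distribution in the question is elided, so `offspring_pmf` below is a placeholder input and `extinction_probability` is hypothetical. In the critical case (mean exactly 1) convergence is slow, so the value returned at the iteration cap is only approximate there.

```python
def extinction_probability(offspring_pmf, iterations=10_000, tol=1e-14):
    """Smallest non-negative root of s = G(s), found by iterating s -> G(s) from 0.

    offspring_pmf[k] is P(an individual has k children) (hypothetical input).
    After n steps, s equals P(Z_n = 0), which increases to the extinction probability.
    """
    def G(s):
        return sum(pk * s**k for k, pk in enumerate(offspring_pmf))

    s = 0.0
    for _ in range(iterations):
        s_next = G(s)
        if abs(s_next - s) < tol:
            return s_next
        s = s_next
    return s


if __name__ == "__main__":
    print(extinction_probability([0.25, 0.25, 0.25, 0.25]))
```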

Paper 2, Section II, F

(a) Let and be independent discrete random variables taking values in sets and respectively, and let be a function.

Let . Show that

Let . Show that

(b) Let be independent Bernoulli random variables. For any function , show that

Let denote the set of all sequences of length . By induction, or otherwise, show that for any function ,

where and .

Paper 2, Section I, F

Let and be real-valued random variables with joint density function

(i) Find the conditional probability density function of given .

(ii) Find the expectation of given .

Paper 2, Section I, F

Let be a non-negative integer-valued random variable such that .

Prove that

[You may use any standard inequality.]

Paper 2, Section II, 10F

(a) For any random variable and and , show that

For a standard normal random variable , compute and deduce that

(b) Let . For independent random variables and with distributions and , respectively, compute the probability density functions of and .

Paper 2, Section II, 12F

(a) Let . For , let be the first time at which a simple symmetric random walk on with initial position at time 0 hits 0 or . Show . [If you use a recursion relation, you do not need to prove that its solution is unique.]

(b) Let be a simple symmetric random walk on starting at 0 at time . For , let be the first time at which has visited distinct vertices. In particular, . Show for . [You may use without proof that, conditional on , the random variables have the distribution of a simple symmetric random walk starting at .]

(c) For , let be the circle graph consisting of vertices and edges between and where is identified with 0 . Let be a simple random walk on starting at time 0 from 0 . Thus and conditional on the random variable is with equal probability (identifying with ).

The cover time of the simple random walk on is the first time at which the random walk has visited all vertices. Show that .

Paper 2, Section II, F

Let . The Curie-Weiss Model of ferromagnetism is the probability distribution defined as follows. For , define random variables with values in such that the probabilities are given by

where is the normalisation constant

(a) Show that for any .

(b) Show that . [You may use for all without proof.]

(c) Let . Show that takes values in , and that for each the number of possible values of such that is

Find for any .

Paper 2, Section II, F

For a positive integer , and , let

(a) For fixed and , show that is a probability mass function on and that the corresponding probability distribution has mean and variance .

(b) Let . Show that, for any ,

Show that the right-hand side of is a probability mass function on .

(c) Let and let with . For all , find integers and such that

[You may use the Central Limit Theorem.]

Paper 2, Section I,

Define the moment-generating function of a random variable . Let be independent and identically distributed random variables with distribution , and let . For , show that

Paper 2, Section I, F

Let be independent random variables, all with uniform distribution on . What is the probability of the event ?

Paper 2, Section II, F

A random graph with nodes is drawn by placing an edge with probability between and for all distinct and , independently. A triangle is a set of three distinct nodes that are all connected: there are edges between and , between and and between and .

(a) Let be the number of triangles in this random graph. Compute the maximum value and the expectation of .

(b) State the Markov inequality. Show that if , for some , then when

(c) State the Chebyshev inequality. Show that if is such that when , then when
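Linearity of expectation is the key to (a), and for small graphs it can be confirmed by summing over every graph. In this sketch `n` and `p` are assumed names for the elided number of nodes and edge probability, and `expected_triangles` is hypothetical; there are 2^(n(n-1)/2) graphs, so keep n small.

```python
from fractions import Fraction
from itertools import combinations, product


def expected_triangles(n, p):
    """Exact E[T] for the random graph on n labelled nodes, by full enumeration."""
    edges = list(combinations(range(n), 2))
    triples = list(combinations(range(n), 3))
    total = Fraction(0)
    for present in product((False, True), repeat=len(edges)):
        edge_set = {e for e, keep in zip(edges, present) if keep}
        weight = p ** len(edge_set) * (1 - p) ** (len(edges) - len(edge_set))
        # combinations yields sorted tuples, so a < b < c and each edge is (min, max)
        t = sum(1 for a, b, c in triples
                if (a, b) in edge_set and (a, c) in edge_set and (b, c) in edge_set)
        total += weight * t
    return total


if __name__ == "__main__":
    print(expected_triangles(4, Fraction(1, 3)))
```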

Paper 2, Section II, F

Let be a non-negative random variable such that is finite, and let .

(a) Show that

(b) Let and be random variables such that and are finite. State and prove the Cauchy-Schwarz inequality for these two variables.

(c) Show that

Paper 2, Section II, F

We randomly place balls in bins independently and uniformly. For each with , let be the number of balls in bin .

(a) What is the distribution of ? For , are and independent?

(b) Let be the number of empty bins, the number of bins with two or more balls, and the number of bins with exactly one ball. What are the expectations of and ?

(c) Let , for an integer . What is ? What is the limit of when ?

(d) Instead, let , for an integer . What is ? What is the limit of when ?
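The expectations in (b) can be checked for small cases by enumerating all equally likely placements. The sketch uses the assumed names `balls` and `bins` for the elided counts; `bin_count_expectations` is hypothetical and costs bins**balls steps.

```python
from fractions import Fraction
from itertools import product


def bin_count_expectations(balls, bins):
    """Exact expected numbers of empty, singly occupied and multiply occupied bins.

    Enumerates all bins**balls equally likely placements, so keep both small.
    """
    empty = single = multiple = 0
    for placement in product(range(bins), repeat=balls):
        loads = [placement.count(b) for b in range(bins)]
        empty += sum(1 for load in loads if load == 0)
        single += sum(1 for load in loads if load == 1)
        multiple += sum(1 for load in loads if load >= 2)
    total = bins**balls
    return Fraction(empty, total), Fraction(single, total), Fraction(multiple, total)


if __name__ == "__main__":
    print(bin_count_expectations(4, 3))
```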

Paper 2, Section II, F

For any positive integer and positive real number , the Gamma distribution has density defined on by

For any positive integers and , the Beta distribution has density defined on by

Let and be independent random variables with respective distributions and . Show that the random variables and are independent and give their distributions.

Paper 2, Section I, F

Let be events in the sample space such that and . The event is said to attract if the conditional probability is greater than , otherwise it is said that repels . Show that if attracts , then attracts . Does repel

Paper 2, Section I, F

Let be a uniform random variable on , and let .

(a) Find the distribution of the random variable .

(b) Define a new random variable as follows: suppose a fair coin is tossed, and if it lands heads we set whereas if it lands tails we set . Find the probability density function of .

Paper 2, Section II, F

When coin is tossed it comes up heads with probability , whereas coin comes up heads with probability . Suppose one of these coins is randomly chosen and is tossed twice. If both tosses come up heads, what is the probability that coin was tossed? Justify your answer.

In each draw of a lottery, an integer is picked independently at random from the first integers , with replacement. What is the probability that in a sample of successive draws the numbers are drawn in a non-decreasing sequence? Justify your answer.
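For the lottery part, a brute-force count over all sequences can be compared with a stars-and-bars count: a non-decreasing sequence of k draws from {1, ..., n} is determined by the multiset of values drawn, and there are C(n + k - 1, k) such multisets. The names `n` and `k` are assumed stand-ins for the elided symbols, and the function is hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import comb


def prob_non_decreasing(n, k):
    """P(k draws with replacement from {1, ..., n} come out non-decreasing), by brute force."""
    good = sum(1 for seq in product(range(1, n + 1), repeat=k)
               if all(a <= b for a, b in zip(seq, seq[1:])))
    return Fraction(good, n**k)


if __name__ == "__main__":
    n, k = 5, 3
    # Brute force next to the stars-and-bars count of multisets of size k.
    print(prob_non_decreasing(n, k), Fraction(comb(n + k - 1, k), n**k))
```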

Paper 2, Section II, F

State and prove Markov's inequality and Chebyshev's inequality, and deduce the weak law of large numbers.

If is a random variable with mean zero and finite variance , prove that for any ,

[Hint: Show first that for every .]

Paper 2, Section II, F

Consider the function

Show that defines a probability density function. If a random variable has probability density function , find the moment generating function of , and find all moments , .

Now define

Show that for every ,

Paper 2, Section II, F

Lionel and Cristiana have and million pounds, respectively, where . They play a series of independent football games in each of which the winner receives one million pounds from the loser (a draw cannot occur). They stop when one player has lost his or her entire fortune. Lionel wins each game with probability and Cristiana wins with probability , where . Find the expected number of games before they stop playing.
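A sketch of an exact computation, useful for checking a closed form: with Lionel's fortune k, the expected number of remaining games D(k) satisfies D(k) = 1 + p D(k+1) + (1 - p) D(k-1) with D(0) = D(a+b) = 0. Here `start`, `total` and `p` are assumed names for Lionel's fortune, the combined fortune and Lionel's win probability, all elided above; `expected_duration` is hypothetical. Because D is affine in the unknown value D(1), two forward passes of the recursion determine it.

```python
from fractions import Fraction


def expected_duration(start, total, p):
    """Exact expected number of games until one player is ruined.

    Lionel's fortune moves +1 w.p. p and -1 w.p. 1 - p, absorbed at 0 and `total`.
    D(k+1) = (D(k) - 1 - (1-p) D(k-1)) / p with D(0) = 0; D is affine in t = D(1),
    so run the recursion for t = 0 and t = 1 and choose t with D(total) = 0.
    """
    q = 1 - p

    def shoot(t):
        d = [Fraction(0), Fraction(t)]
        for k in range(1, total):
            d.append((d[k] - 1 - q * d[k - 1]) / p)
        return d

    d0, d1 = shoot(0), shoot(1)
    t = -d0[total] / (d1[total] - d0[total])
    return d0[start] + t * (d1[start] - d0[start])


if __name__ == "__main__":
    print(expected_duration(3, 10, Fraction(2, 5)))  # hypothetical fortunes 3 and 7
```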

Paper 2, Section I, F

Consider independent discrete random variables and assume exists for all .

Show that

If the are also positive, show that

Paper 2, Section I, F

Consider a particle situated at the origin of . At successive times a direction is chosen independently by picking an angle uniformly at random in the interval , and the particle then moves a Euclidean unit length in this direction. Find the expected squared Euclidean distance of the particle from the origin after such movements.

Paper 2, Section II, 9F

State the axioms of probability.

State and prove Boole's inequality.

Suppose you toss a sequence of coins, the -th of which comes up heads with probability , where . Calculate the probability of the event that infinitely many heads occur.

Suppose you repeatedly and independently roll a pair of fair dice and each time record the sum of the dice. What is the probability that an outcome of 5 appears before an outcome of 7? Justify your answer.
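One way to see the structure of the answer: rolls whose sum is neither 5 nor 7 change nothing, so only the first decisive roll matters, and it shows 5 with probability P(5) / (P(5) + P(7)). The sketch below computes this exactly from the 36 equally likely outcomes and adds a seeded simulation as a cross-check; the function names are our own.

```python
import random
from fractions import Fraction
from itertools import product

# Exact distribution of the sum of two fair dice.
SUM_PROB = {}
for a, b in product(range(1, 7), repeat=2):
    SUM_PROB[a + b] = SUM_PROB.get(a + b, 0) + Fraction(1, 36)


def prob_first_before_second(first, second):
    """P(total `first` appears before total `second` in repeated rolls of two dice).

    Rolls showing neither total change nothing, so only the first roll showing
    one of the two totals matters.
    """
    a, b = SUM_PROB[first], SUM_PROB[second]
    return a / (a + b)


def simulate(first, second, trials=20_000, seed=1):
    """Seeded Monte Carlo estimate of the same probability."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        while True:
            total = rng.randint(1, 6) + rng.randint(1, 6)
            if total == first:
                wins += 1
                break
            if total == second:
                break
    return wins / trials


if __name__ == "__main__":
    print(prob_first_before_second(5, 7), simulate(5, 7))
```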

Paper 2, Section II, F

Give the definition of an exponential random variable with parameter . Show that is memoryless.

Now let be independent exponential random variables, each with parameter . Find the probability density function of the random variable and the probability .

Suppose the random variables are independent and each has probability density function given by

Find the probability density function of [You may use standard results without proof provided they are clearly stated.]

Paper 2, Section II, F

For any function and random variables , the "tower property" of conditional expectations is

Provide a proof of this property when both are discrete.

Let be a sequence of independent uniform -random variables. For find the expected number of 's needed such that their sum exceeds , that is, find where

[Hint: Write
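A Monte Carlo sketch for checking an answer. It assumes 0 <= x <= 1 (the range is elided), in which case the expected count is known to be e^x; the function name, seed and trial count are illustrative choices. Each trial adds uniforms until the running sum first exceeds x.

```python
import random
from math import exp


def mean_count_to_exceed(x, trials=50_000, seed=2):
    """Monte Carlo estimate of E[N(x)], where N(x) = min{n : U_1 + ... + U_n > x}."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        running_sum, n = 0.0, 0
        while running_sum <= x:  # keep adding uniforms until the sum exceeds x
            running_sum += rng.random()
            n += 1
        total += n
    return total / trials


if __name__ == "__main__":
    for x in (0.5, 1.0):
        print(x, mean_count_to_exceed(x), exp(x))
```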

Paper 2, Section II, F

Define what it means for a random variable to have a Poisson distribution, and find its moment generating function.

Suppose are independent Poisson random variables with parameters . Find the distribution of .

If are independent Poisson random variables with parameter , find the distribution of . Hence or otherwise, find the limit of the real sequence

[Standard results may be used without proof provided they are clearly stated.]

Paper 2, Section I, F

(i) Let be a random variable. Use Markov's inequality to show that

for all and real .

(ii) Calculate in the case where is a Poisson random variable with parameter . Using the inequality from part (i) with a suitable choice of , prove that

for all .

Paper 2, Section I, F

Let be a random variable with mean and variance . Let

Show that for all . For what value of is there equality?

Let

Supposing that has probability density function , express in terms of . Show that is minimised when is such that .

Paper 2, Section II, F

Let be the sample space of a probabilistic experiment, and suppose that the sets are a partition of into events of positive probability. Show that

for any event of positive probability.

A drawer contains two coins. One is an unbiased coin, which when tossed, is equally likely to turn up heads or tails. The other is a biased coin, which will turn up heads with probability and tails with probability . One coin is selected (uniformly) at random from the drawer. Two experiments are performed:

(a) The selected coin is tossed times. Given that the coin turns up heads times and tails times, what is the probability that the coin is biased?

(b) The selected coin is tossed repeatedly until it turns up heads times. Given that the coin is tossed times in total, what is the probability that the coin is biased?

Paper 2, Section II, F

Let be a geometric random variable with . Derive formulae for and in terms of

A jar contains balls. Initially, all of the balls are red. Every minute, a ball is drawn at random from the jar, and then replaced with a green ball. Let be the number of minutes until the jar contains only green balls. Show that the expected value of is . What is the variance of
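The mean and variance follow from splitting T into independent geometric waiting times: while r balls are still red, each minute turns a red ball green with probability r/n, and nothing changes otherwise. The sketch sums the geometric means and variances exactly; `n` is an assumed name for the elided number of balls and `jar_time_moments` is hypothetical.

```python
from fractions import Fraction


def jar_time_moments(n):
    """Exact mean and variance of T, the number of minutes until all n balls are green.

    While r red balls remain, the wait for the next red draw is geometric with
    success probability s = r/n (mean 1/s, variance (1 - s)/s^2); the waits are
    independent, so means and variances add.
    """
    mean = Fraction(0)
    var = Fraction(0)
    for r in range(1, n + 1):
        s = Fraction(r, n)
        mean += 1 / s
        var += (1 - s) / s**2
    return mean, var


if __name__ == "__main__":
    print(jar_time_moments(10))
```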

Paper 2, Section II, F

Let be a random variable taking values in the non-negative integers, and let be the probability generating function of . Assuming is everywhere finite, show that

where is the mean of and is its variance. [You may interchange differentiation and expectation without justification.]

Consider a branching process where individuals produce independent random numbers of offspring with the same distribution as . Let be the number of individuals in the -th generation, and let be the probability generating function of . Explain carefully why

Assuming , compute the mean of . Show that

Suppose and . Compute the probability that the population will eventually become extinct. You may use standard results on branching processes as long as they are clearly stated.

Paper 2, Section II, F

Let be an exponential random variable with parameter . Show that

for any .

Let be the greatest integer less than or equal to . What is the probability mass function of ? Show that .

Let be the fractional part of . What is the density of ?

Show that and are independent.

Paper 2, Section I, F

Define the probability generating function of a random variable taking values in the non-negative integers.

A coin shows heads with probability on each toss. Let be the number of tosses up to and including the first appearance of heads, and let . Find the probability generating function of .

Show that where .

Paper 2, Section I, F

Given two events and with and , define the conditional probability .

Show that

A random number of fair coins are tossed, and the total number of heads is denoted by . If for , find .

Paper 2, Section II, F

Let be independent random variables with distribution functions . Show that have distribution functions

Now let be independent random variables, each having the exponential distribution with parameter 1. Show that has the exponential distribution with parameter 2, and that is independent of .

Hence or otherwise show that has the same distribution as , and deduce the mean and variance of .

[You may use without proof that has mean 1 and variance 1.]

Paper 2, Section II, F

(i) Let be the size of the generation of a branching process with family-size probability generating function , and let . Show that the probability generating function of satisfies for .

(ii) Suppose the family-size mass function is Find , and show that

Deduce the value of .

(iii) Write down the moment generating function of . Hence or otherwise show that, for ,

[You may use the continuity theorem but, if so, should give a clear statement of it.]

Paper 2, Section II, F

(i) Define the distribution function of a random variable , and also its density function assuming is differentiable. Show that

(ii) Let be independent random variables each with the uniform distribution on . Show that

What is the probability that the random quadratic equation has real roots?

Given that the two roots of the above quadratic are real, what is the probability that both and

Paper 2, Section II, F

(i) Define the moment generating function of a random variable . If are independent and , show that the moment generating function of is .

(ii) Assume , and for . Explain the expansion

where and [You may assume the validity of interchanging expectation and differentiation.]

(iii) Let be independent, identically distributed random variables with mean 0 and variance 1, and assume their moment generating function satisfies the condition of part (ii) with .

Suppose that and are independent. Show that , and deduce that satisfies .

Show that as , and deduce that for all .

Show that and are normally distributed.

Paper 2, Section I, F

What does it mean to say that events are (i) pairwise independent, (ii) independent?

Consider pairwise disjoint events and , with

Let . Prove that the events and are pairwise independent if and only if

Prove or disprove that there exist and such that these three events are independent.

Paper 2, Section I, F

Let be a random variable taking non-negative integer values and let be a random variable taking real values.

(a) Define the probability-generating function . Calculate it explicitly for a Poisson random variable with mean .

(b) Define the moment-generating function . Calculate it explicitly for a normal random variable .

(c) By considering a random sum of independent copies of , prove that, for general and is the moment-generating function of some random variable.

Paper 2, Section II, F

A circular island has a volcano at its central point. During an eruption, lava flows from the mouth of the volcano and covers a sector with random angle (measured in radians), whose line of symmetry makes a random angle with some fixed compass bearing.

The variables and are independent. The probability density function of is constant on and the probability density function of is of the form where , and is a constant.

(a) Find the value of . Calculate the expected value and the variance of the sector angle . Explain briefly how you would simulate the random variable using a uniformly distributed random variable .

(b) and are two houses on the island which are collinear with the mouth of the volcano, but on different sides of it. Find

(i) the probability that is hit by the lava;

(ii) the probability that both and are hit by the lava;

(iii) the probability that is not hit by the lava given that is hit.

Paper 2, Section II, F

I was given a clockwork orange for my birthday. Initially, I place it at the centre of my dining table, which happens to be exactly 20 units long. One minute after I place it on the table it moves one unit towards the left end of the table or one unit towards the right, each with probability 1/2. It continues in this manner at one minute intervals, with the direction of each move being independent of what has gone before, until it reaches either end of the table where it promptly falls off. If it falls off the left end it will break my Ming vase. If it falls off the right end it will land in a bucket of sand leaving the vase intact.

(a) Derive the difference equation for the probability that the Ming vase will survive, in terms of the current distance from the orange to the left end, where .

(b) Derive the corresponding difference equation for the expected time when the orange falls off the table.

(c) Write down the general formula for the solution of each of the difference equations from (a) and (b). [No proof is required.]

(d) Based on parts (a)-(c), calculate the probability that the Ming vase will survive if, instead of placing the orange at the centre of the table, I place it initially 3 units from the right end of the table. Calculate the expected time until the orange falls off.

(e) Suppose I place the orange 3 units from the left end of the table. Calculate the probability that the orange will fall off the right end before it reaches a distance 1 unit from the left end of the table.

Paper 2, Section II, F

(a) State Markov's inequality.

(b) Let be a given positive integer. You toss an unbiased coin repeatedly until the first head appears, which occurs on the th toss. Next, I toss the same coin until I get my first tail, which occurs on my th toss. Then you continue until you get your second head with a further tosses; then I continue with a further tosses until my second tail. We continue for turns like this, and generate a sequence , of random variables. The total number of tosses made is . (For example, for , a sequence of outcomes gives and .)

Find the probability-generating functions of the random variables and . Hence or otherwise obtain the mean values and .

Obtain the probability-generating function of the random variable , and find the mean value .

Prove that, for ,

For , calculate , and confirm that it satisfies Markov's inequality.

Paper 2, Section II, F

(a) Let be pairwise disjoint events such that their union gives the whole set of outcomes, with for . Prove that for any event with and for any

(b) A prince is equally likely to sleep on any number of mattresses from six to eight; on half the nights a pea is placed beneath the lowest mattress. With only six mattresses his sleep is always disturbed by the presence of a pea; with seven a pea, if present, is unnoticed in one night out of five; and with eight his sleep is undisturbed despite an offending pea in two nights out of five.

What is the probability that, on a given night, the prince's sleep was undisturbed?

On the morning of his wedding day, he announces that he has just spent the most peaceful and undisturbed of nights. What is the expected number of mattresses on which he slept the previous night?
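Both answers follow from the law of total probability and Bayes' theorem. The sketch assumes that a night without a pea is always undisturbed, which the question implies but does not state; the constant names are our own.

```python
from fractions import Fraction

# P(sleep undisturbed | k mattresses, pea present), read off from the question.
UNDISTURBED_WITH_PEA = {6: Fraction(0), 7: Fraction(1, 5), 8: Fraction(2, 5)}
P_MATTRESSES = Fraction(1, 3)  # six, seven or eight, equally likely
P_PEA = Fraction(1, 2)


def joint_undisturbed(k):
    """P(k mattresses and undisturbed sleep), assuming no pea means an undisturbed night."""
    return P_MATTRESSES * ((1 - P_PEA) + P_PEA * UNDISTURBED_WITH_PEA[k])


p_undisturbed = sum(joint_undisturbed(k) for k in UNDISTURBED_WITH_PEA)
expected_mattresses = (sum(k * joint_undisturbed(k) for k in UNDISTURBED_WITH_PEA)
                       / p_undisturbed)

if __name__ == "__main__":
    print(p_undisturbed, expected_mattresses)
```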

Paper 2, Section I, F

Let and be two non-constant random variables with finite variances. The correlation coefficient is defined by

(a) Using the Cauchy-Schwarz inequality or otherwise, prove that

(b) What can be said about the relationship between and when either (i) or (ii) . [Proofs are not required.]

(c) Take and let be independent random variables taking values with probabilities . Set

Find .

Paper 2, Section I, F

Jensen's inequality states that for a convex function and a random variable with a finite mean, .

(a) Suppose that where is a positive integer, and is a random variable taking values with equal probabilities, and where the sum . Deduce from Jensen's inequality that

(b) horses take part in races. The results of different races are independent. The probability for horse to win any given race is , with .

Let be the probability that a single horse wins all races. Express as a polynomial of degree in the variables .

By using (1) or otherwise, prove that .

Paper 2, Section II, F

Let be bivariate normal random variables, with the joint probability density function

where

and .

(a) Deduce that the marginal probability density function

(b) Write down the moment-generating function of in terms of and . [No proofs are required.]

(c) By considering the ratio prove that, conditional on , the distribution of is normal, with mean and variance and , respectively.

Paper 2, Section II, F

In a branching process every individual has probability of producing exactly offspring, , and the individuals of each generation produce offspring independently of each other and of individuals in preceding generations. Let represent the size of the th generation. Assume that and and let be the generating function of . Thus

(a) Prove that

(b) State a result in terms of about the probability of eventual extinction. [No proofs are required.]

(c) Suppose the probability that an individual leaves descendants in the next generation is , for . Show from the result you state in (b) that extinction is certain. Prove further that in this case

and deduce the probability that the th generation is empty.

Paper 2, Section II, F

The yearly levels of water in the river Camse are independent random variables , with a given continuous distribution function and . The levels have been observed in years and their values recorded. The local council has decided to construct a dam of height

Let be the subsequent time that elapses before the dam overflows:

(a) Find the distribution function , and show that the mean value

(b) Express the conditional probability , where and , in terms of .

(c) Show that the unconditional probability

(d) Determine the mean value .

Paper 2, Section II, F

(a) What does it mean to say that a random variable with values has a geometric distribution with a parameter where ?

An expedition is sent to the Himalayas with the objective of catching a pair of wild yaks for breeding. Assume yaks are loners and roam about the Himalayas at random. The probability that a given trapped yak is male is independent of prior outcomes. Let be the number of yaks that must be caught until a breeding pair is obtained.

(b) Find the expected value of .

(c) Find the variance of .

Paper 2, Section , F

Prove the law of total probability: if are pairwise disjoint events with , and then .

There are people in a lecture room. Their birthdays are independent random variables, and each person's birthday is equally likely to be any of the 365 days of the year. By using the bound for , prove that if then the probability that at least two people have the same birthday is at least .

[In calculations, you may take .]
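A numerical check of the birthday argument, assuming the elided bound is the usual $1 - x \le e^{-x}$. The sketch computes the exact probability that at least two of $n$ people share a birthday (365 equally likely days, as in the question) alongside the lower bound that follows from that inequality. The function names are hypothetical.

```python
import math

def shared_birthday_prob(n, days=365):
    """Exact P(at least two of n people share a birthday)."""
    p_distinct = 1.0
    for k in range(n):
        p_distinct *= (days - k) / days   # k-th person avoids the k earlier birthdays
    return 1.0 - p_distinct

def shared_birthday_lower_bound(n, days=365):
    """Lower bound from 1 - k/days <= exp(-k/days), summed over k = 0..n-1."""
    return 1.0 - math.exp(-n * (n - 1) / (2 * days))

for n in (10, 22, 23, 30):
    print(n, round(shared_birthday_prob(n), 4), round(shared_birthday_lower_bound(n), 4))
```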

Paper 2, Section I, F

Consider a pair of jointly normal random variables , with mean values , , variances and correlation coefficient with .

(a) Write down the joint probability density function for .

(b) Prove that are independent if and only if .

Paper 2, Section II, F

Let and be three pairwise disjoint events such that the union is the full event and . Let be any event with . Prove the formula

A Royal Navy speedboat has intercepted an abandoned cargo of packets of the deadly narcotic spitamin. This sophisticated chemical can be manufactured in only three places in the world: a plant in Authoristan (A), a factory in Bolimbia (B) and the ultramodern laboratory on board the pirate submarine Crash (C), which cruises the ocean. The investigators wish to determine where this particular cargo comes from, but in the absence of prior knowledge they have to assume that each of the possibilities A, B and C is equally likely.

It is known that a packet from A contains pure spitamin in of cases and is contaminated in of cases. For B the corresponding figures are and , and for C they are and .

Analysis of the captured cargo showed that out of 10000 packets checked, 9800 contained the pure drug and the remaining 200 were contaminated. On the basis of this analysis, the Royal Navy captain estimated that of the packets contain pure spitamin and reported his opinion that with probability roughly the cargo was produced in B and with probability roughly it was produced in C.

Assume that the number of contaminated packets follows the binomial distribution where equals 5 for for and 1 for C. Prove that the captain's opinion is wrong: there is an overwhelming chance that the cargo comes from B.

[Hint: Let be the event that 200 out of 10000 packets are contaminated. Compare the ratios of the conditional probabilities and . You may find it helpful that and . You may also take .]

Paper 2, Section II, F

Let and be two independent uniformly distributed random variables on . Prove that and , and find , where is a non-negative integer.

Let be independent random points of the unit square . We say that is a maximal external point if, for each , either or . (For example, in the figure below there are three maximal external points.) Determine the expected number of maximal external points.
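To check an answer for the expected number of maximal external points numerically, the sketch below simulates $n$ uniform points in the unit square. It uses the observation that, after sorting by the first coordinate in decreasing order, a point is maximal exactly when its second coordinate beats every earlier one (a "record"), so the simulated mean can be compared with the harmonic number $H_n = 1 + 1/2 + \dots + 1/n$. Function names and the trial count are illustrative choices.

```python
import random

def count_maximal(points):
    """Count points not dominated in both coordinates by any other point."""
    # Sweep from the largest x to the smallest; a point is maximal iff its y
    # exceeds every y seen so far (i.e. every point with a larger x).
    best_y = float("-inf")
    count = 0
    for x, y in sorted(points, reverse=True):
        if y > best_y:
            count += 1
            best_y = y
    return count

def mean_maximal(n, trials=20000, seed=1):
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        pts = [(rng.random(), rng.random()) for _ in range(n)]
        total += count_maximal(pts)
    return total / trials

def harmonic(n):
    return sum(1 / k for k in range(1, n + 1))

print(mean_maximal(5), harmonic(5))
```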

Paper 2, Section II, F

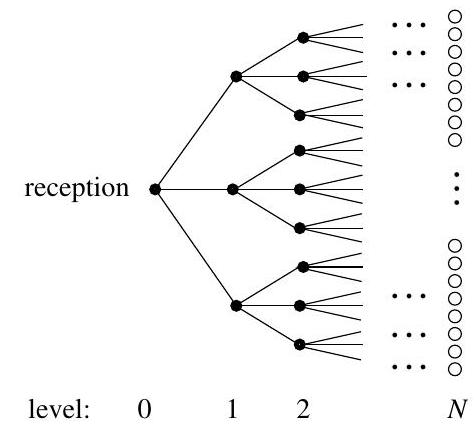

No-one in their right mind would wish to be a guest at the Virtual Reality Hotel. The diagram below shows part of the floor plan of the hotel, where rooms are represented by black or white circles. The hotel is built in the shape of a tree: there is one room (reception) situated at level 0, three rooms at level 1, nine at level 2, and so on. The rooms are joined by corridors to their neighbours: each room has four neighbours, apart from the reception, which has three neighbours. Each corridor is blocked with probability and open for passage in both directions with probability , independently for different corridors. Every room at level , where is a given very large number, has an open window through which a guest can (and should) escape into the street. An arriving guest is placed in the reception and then wanders freely, insofar as the blocked corridors allow.

(a) Prove that the probability that the guest will not escape is close to a solution of the equation , where is a probability-generating function that you should specify.

(b) Hence show that the guest's chance of escape is approximately .

Paper 2, Section II, F

I throw two dice and record the scores and . Let be the sum and the difference .

(a) Suppose that the dice are fair, so the values are equally likely. Calculate the mean and variance of both and . Find all the values of and at which the probabilities are each either greatest or least. Determine whether the random variables and are independent.

(b) Now suppose that the dice are unfair, and that they give the values with probabilities and , respectively. Write down the values of 2), and . By comparing with and applying the arithmetic-mean-geometric-mean inequality, or otherwise, show that the probabilities cannot all be equal.
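For part (a), the 36 equally likely outcomes are few enough to enumerate exactly. This sketch, using exact fractions, computes the means and variances of the sum and the difference and tests independence by comparing every joint probability with the product of the marginals. Names such as `mean_var` are hypothetical helpers.

```python
from fractions import Fraction
from itertools import product

faces = range(1, 7)
outcomes = list(product(faces, faces))          # 36 equally likely (first, second) pairs
prob = Fraction(1, len(outcomes))

def mean_var(values):
    m = sum(prob * v for v in values)
    return m, sum(prob * (v - m) ** 2 for v in values)

S = [x + y for x, y in outcomes]                # sum of the scores
D = [x - y for x, y in outcomes]                # difference of the scores
mean_S, var_S = mean_var(S)
mean_D, var_D = mean_var(D)

def p(pred):
    """Probability of the set of outcomes satisfying pred."""
    return sum(prob for o in outcomes if pred(o))

# Independent iff every joint probability equals the product of the marginals.
independent = all(
    p(lambda o: o[0] + o[1] == s and o[0] - o[1] == d)
    == p(lambda o: o[0] + o[1] == s) * p(lambda o: o[0] - o[1] == d)
    for s in set(S) for d in set(D)
)
print(mean_S, var_S, mean_D, var_D, independent)
```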

2.I.3F

There are socks in a drawer, three of which are red and the rest black. John chooses his socks by selecting two at random from the drawer and puts them on. He is three times more likely to wear socks of different colours than to wear matching red socks. Find .

For this value of , what is the probability that John wears matching black socks?
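A brute-force check of the sock calculation, reading "three times more likely" as "three times as likely". With $n$ socks, three of them red, and two drawn uniformly at random, the sketch computes the probabilities of a mixed pair, a red pair and a black pair exactly, then searches for the drawer size satisfying the stated ratio. The function name `sock_probs` is hypothetical.

```python
from fractions import Fraction
from math import comb

def sock_probs(n, red=3):
    """(P(mixed pair), P(red pair), P(black pair)) when 2 of n socks are drawn."""
    pairs = comb(n, 2)
    p_diff = Fraction(red * (n - red), pairs)
    p_red = Fraction(comb(red, 2), pairs)
    p_black = Fraction(comb(n - red, 2), pairs)
    return p_diff, p_red, p_black

# Drawer sizes at which a mixed pair is three times as likely as a red pair.
solutions = [n for n in range(4, 100) if sock_probs(n)[0] == 3 * sock_probs(n)[1]]
print(solutions, sock_probs(solutions[0])[2])
```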

2.I.4F

A standard six-sided die is thrown. Calculate the mean and variance of the number shown.

The die is thrown times. By using Chebyshev's inequality, find an such that

where is the total of the numbers shown over the throws.
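The mean and variance of a single throw can be computed exactly, and Chebyshev's inequality then bounds deviations of the total of $n$ independent throws, whose variance is $n$ times that of one throw. Since the target probability in the question is elided, the helper below (a hypothetical name) simply evaluates the bound $P(|S_n - 3.5n| \ge a) \le n\,\mathrm{Var}/a^2$ for given $n$ and $a$.

```python
from fractions import Fraction

faces = range(1, 7)
mean = sum(Fraction(k, 6) for k in faces)                 # E[X] for one fair die
var = sum(Fraction(k * k, 6) for k in faces) - mean ** 2   # E[X^2] - E[X]^2

def chebyshev_bound(n, a):
    """Chebyshev: P(|S_n - n*mean| >= a) <= n*var / a**2 for n independent throws."""
    return n * var / Fraction(a) ** 2

print(mean, var, chebyshev_bound(12, 35))
```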

2.II.10F

and play a series of games. The games are independent, and each is won by with probability and by with probability . The players stop when the number of wins by one player is three greater than the number of wins by the other player. The player with the greater number of wins is then declared overall winner.

(i) Find the probability that exactly 5 games are played.

(ii) Find the probability that is the overall winner.
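One way to organise this calculation is as a random walk on the lead $d$ = (wins of the first player) minus (wins of the second), absorbed at $d = \pm 3$. The sketch below propagates probability mass over the non-absorbed leads $-2, \dots, 2$ and records when and where absorption happens, giving numerical values against which closed-form answers to (i) and (ii) can be checked. Here `p` is the first player's win probability per game; the function name and the cut-off `max_games` are illustrative.

```python
def series_distribution(p, max_games=500):
    """Return (P(T = k) for k = 0..max_games, P(first player wins overall)).

    State is the lead d = (first player's wins) - (second player's wins);
    play stops when |d| = 3. Mass left after max_games is negligible here.
    """
    q = 1 - p
    alive = {0: 1.0}                  # probability of each non-absorbed lead
    stop_at = [0.0] * (max_games + 1)
    first_wins = 0.0
    for k in range(1, max_games + 1):
        nxt = {}
        for d, mass in alive.items():
            for step, w in ((1, p), (-1, q)):
                e = d + step
                if e == 3:
                    first_wins += mass * w
                    stop_at[k] += mass * w
                elif e == -3:
                    stop_at[k] += mass * w
                else:
                    nxt[e] = nxt.get(e, 0.0) + mass * w
        alive = nxt
    return stop_at, first_wins

stop_at, first_wins = series_distribution(0.6)
print(stop_at[5], first_wins)
```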

2.II.11F

Let and have the bivariate normal density function

for fixed . Let . Show that and are independent variables. Hence, or otherwise, determine

2.II.12F

The discrete random variable has distribution given by

where . Determine the mean and variance of .

A fair die is rolled until all 6 scores have occurred. Find the mean and standard deviation of the number of rolls required.

[Hint:
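Assuming the distribution in the first part is the geometric distribution on $\{1, 2, \dots\}$ with success probability $p$ (mean $1/p$, variance $(1-p)/p^2$), the number of rolls needed to see all six scores is a sum of independent geometric waits: while $k$ scores are still missing, each roll succeeds with probability $k/6$. The sketch adds up the stage means and variances exactly.

```python
from fractions import Fraction
import math

# While k distinct scores are still missing, each roll succeeds with probability k/6,
# so that stage's wait is geometric with mean 6/k and variance (1 - k/6) / (k/6)**2.
stages = [Fraction(k, 6) for k in range(1, 7)]
mean_rolls = sum(1 / p for p in stages)
var_rolls = sum((1 - p) / p ** 2 for p in stages)    # stages are independent
print(mean_rolls, float(mean_rolls), math.sqrt(var_rolls))
```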

2.II.9F

A population evolves in generations. Let be the number of members in the th generation, with . Each member of the th generation gives birth to a family, possibly empty, of members of the th generation; the size of this family is a random variable and we assume that the family sizes of all individuals form a collection of independent identically distributed random variables each with generating function .

Let be the generating function of . State and prove a formula for in terms of . Determine the mean of in terms of the mean of .

Suppose that has a Poisson distribution with mean . Find an expression for in terms of , where is the probability that the population becomes extinct by the th generation.
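A numerical sketch of the extinction recursion, assuming the process starts from a single individual. Then $q_0 = 0$ and $q_n = G(q_{n-1})$, where $G(s) = e^{\lambda(s-1)}$ is the Poisson generating function. Iterating shows $q_n$ increasing to the smallest fixed point of $G$ in $[0, 1]$, which equals 1 when $\lambda \le 1$. The function name is hypothetical.

```python
import math

def extinction_by_generation(lam, n):
    """[q_0, ..., q_n], q_k = P(extinct by generation k), Poisson(lam) families.

    Assumes one initial individual, so q_0 = 0 and q_k = G(q_{k-1})
    with G(s) = exp(lam * (s - 1)).
    """
    q = 0.0
    history = [q]
    for _ in range(n):
        q = math.exp(lam * (q - 1.0))
        history.append(q)
    return history

print(extinction_by_generation(2.0, 10)[-1], extinction_by_generation(0.5, 10)[-1])
```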

Paper 2, Section I, F

Let be a normally distributed random variable with mean 0 and variance 1 . Define, and determine, the moment generating function of . Compute for .

Let be a normally distributed random variable with mean and variance . Determine the moment generating function of .
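The moments of a standard normal variable can be read off the series $e^{\theta^2/2} = \sum_k \theta^{2k}/(2^k k!)$, which gives $E X^{2k} = (2k)!/(2^k k!) = (2k-1)!!$ and vanishing odd moments. The sketch compares that closed form with a direct trapezoidal-rule integral of $x^n \varphi(x)$; the integration range and step count are illustrative choices, not part of the question.

```python
import math

def normal_moment(n, lo=-12.0, hi=12.0, steps=20000):
    """E[X^n] for X ~ N(0, 1), by the trapezoidal rule on [lo, hi]."""
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        w = 0.5 if i in (0, steps) else 1.0       # trapezoid end-point weights
        total += w * x ** n * math.exp(-x * x / 2)
    return total * h / math.sqrt(2 * math.pi)

def moment_from_mgf(n):
    """Closed form read off from exp(theta^2 / 2): (n-1)!! for even n, 0 for odd n."""
    if n % 2:
        return 0
    return math.prod(range(n - 1, 0, -2))

print([round(normal_moment(n), 6) for n in range(7)], [moment_from_mgf(n) for n in range(7)])
```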

Paper 2, Section I, F

Let and be independent random variables, each uniformly distributed on . Let and . Show that , and hence find the covariance of and .

Paper 2, Section II, F

Let and be three random points on a sphere with centre . The positions of and are independent, and each is uniformly distributed over the surface of the sphere. Calculate the probability density function of the angle formed by the lines and .

Calculate the probability that all three of the angles and are acute. [Hint: Condition on the value of the angle .]
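A Monte Carlo sketch for checking the density of the angle between two independent uniform points on a sphere. Normalised standard Gaussian vectors are uniformly distributed on the sphere, so the sketch samples pairs that way and records the angle at the centre. Its empirical distribution function can be compared with a candidate such as $(1 - \cos t)/2$, which corresponds to the density $\tfrac12 \sin\theta$ on $[0, \pi]$. Sample sizes and names are illustrative.

```python
import math
import random

def random_unit_vector(rng):
    # A normalised standard Gaussian vector is uniform on the unit sphere.
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        r = math.sqrt(sum(c * c for c in v))
        if r > 0:
            return [c / r for c in v]

def sample_angles(trials=50000, seed=0):
    """Angles AOB for independent uniform points A, B on the unit sphere."""
    rng = random.Random(seed)
    angles = []
    for _ in range(trials):
        a, b = random_unit_vector(rng), random_unit_vector(rng)
        dot = sum(x * y for x, y in zip(a, b))
        angles.append(math.acos(max(-1.0, min(1.0, dot))))   # clamp rounding error
    return angles
```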

Paper 2, Section II, F

Let be events in a sample space. For each of the following statements, either prove the statement or provide a counterexample.

(i)

(ii)

(iii)

(iv) If is an event and if, for each is a pair of independent events, then is also a pair of independent events.

Paper 2, Section II, F

Let and be independent non-negative random variables, with densities and respectively. Find the joint density of and , where is a positive constant.

Let and be independent and exponentially distributed random variables, each with density

Find the density of . Is it the same as the density of the random variable

Paper 2, Section II, F

Let be a non-negative integer-valued random variable with

Define , and show that

Let be a sequence of independent and identically distributed continuous random variables. Let the random variable mark the point at which the sequence stops decreasing: that is, is such that

where, if there is no such finite value of , we set . Compute , and show that . Determine .

2.I.3F

What is a convex function? State Jensen's inequality for a convex function of a random variable which takes finitely many values.

Let . By using Jensen's inequality, or otherwise, find the smallest constant so that

[You may assume that is convex for .]

2.I.4F

Let be a fixed positive integer and a discrete random variable with values in . Define the probability generating function of . Express the mean of in terms of its probability generating function. The Dirichlet probability generating function of is defined as

Express the mean of and the mean of in terms of .

2.II.10F

Let be independent random variables with values in and the same probability density . Let . Compute the joint probability density of and the marginal densities of and respectively. Are and independent?

2.II.11F

A normal deck of playing cards contains 52 cards, four each with face values in the set . Suppose the deck is well shuffled so that each arrangement is equally likely. Write down the probability that the top and bottom cards have the same face value.

Consider the following algorithm for shuffling:

S1: Permute the deck randomly so that each arrangement is equally likely.

S2: If the top and bottom cards do not have the same face value, toss a biased coin that comes up heads with probability , and go back to step S1 if it comes up heads. Otherwise stop.

All coin tosses and all permutations are assumed to be independent. When the algorithm stops, let and denote the respective face values of the top and bottom cards and compute the probability that . Write down the probability that for some and the probability that for some . What value of will make and independent random variables? Justify your answer.
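Each pass through S1 produces a matching top and bottom card with probability $3/51 = 1/17$ (whatever the top card, 3 of the other 51 cards share its face value). The sketch below computes $P(X = Y)$ at stopping as a function of the heads probability, by comparing the chance of stopping on a match with the chance of stopping on a mismatch in a single round. By symmetry $X$ and $Y$ are each uniform on the 13 face values, so $P(X = Y) = 1/13$ is a necessary condition for independence that can be checked here. The function name is hypothetical.

```python
from fractions import Fraction

# Uniform permutation: given the top card, 3 of the remaining 51 share its face value.
P_MATCH = Fraction(3, 51)

def prob_stop_with_match(p):
    """P(X = Y) when the algorithm stops, where p = P(heads).

    In each round: match w.p. 1/17 (stop); otherwise toss the coin,
    repeat on heads (w.p. p) and stop on tails (w.p. 1 - p).
    """
    p = Fraction(p)
    stop_match = P_MATCH
    stop_no_match = (1 - P_MATCH) * (1 - p)
    return stop_match / (stop_match + stop_no_match)

print(prob_stop_with_match(0), prob_stop_with_match(Fraction(1, 2)))
```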

2.II.12F

Let and define

Find such that is a probability density function. Let be a sequence of independent, identically distributed random variables, each having with the correct choice of as probability density. Compute the probability density function of . [You may use the identity

valid for all and .]

Deduce the probability density function of

Explain why your result does not contradict the weak law of large numbers.

2.II.9F

Suppose that a population evolves in generations. Let be the number of members in the -th generation and . Each member of the -th generation gives birth to a family, possibly empty, of members of the -th generation; the size of this family is a random variable and we assume that the family sizes of all individuals form a collection of independent identically distributed random variables with the same generating function .

Let be the generating function of . State and prove a formula for in terms of . Use this to compute the variance of .

Now consider the total number of individuals in the first generations; this number is a random variable and we write for its generating function. Find a formula that expresses in terms of and .

2.I.3F

Suppose and is a positive real-valued random variable with probability density

for , where is a constant.

Find the constant and show that, if and ,

[You may assume the inequality for all .]

2.I.4F

Describe the Poisson distribution characterised by parameter . Calculate the mean and variance of this distribution in terms of .

Show that the sum of independent random variables, each having the Poisson distribution with , has a Poisson distribution with .

Use the central limit theorem to prove that

2.II

Given a real-valued random variable , we define by

Consider a second real-valued random variable , independent of . Show that

You gamble in a fair casino that offers you unlimited credit, even though your initial wealth is 0. At every game your wealth increases or decreases by with equal probability . Let denote your wealth after the game. For a fixed real number , compute defined by

Verify that the result is real-valued.

Show that for even,

for some constant , which you should determine. What is for odd?
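Assuming unit steps (the step size is elided above), the characteristic function of the wealth after $n$ games is $\cos^n t$, and the Fourier inversion $P(S_n = 0) = \frac{1}{2\pi}\int_{-\pi}^{\pi} \cos^n t \, dt$ can be checked numerically against the binomial count $\binom{n}{n/2} 2^{-n}$. The periodic trapezoidal rule used below is exact for trigonometric polynomials of degree below the grid size. Function names are hypothetical.

```python
import math

def prob_return_fourier(n, grid=4096):
    """(1 / 2pi) * integral over [-pi, pi] of cos(t)^n dt, periodic trapezoidal rule."""
    h = 2 * math.pi / grid
    return sum(math.cos(-math.pi + k * h) ** n for k in range(grid)) * h / (2 * math.pi)

def prob_return_exact(n):
    """P(S_n = 0) for a simple symmetric +/-1 walk started at 0."""
    return math.comb(n, n // 2) / 2 ** n if n % 2 == 0 else 0.0

print(prob_return_fourier(10), prob_return_exact(10))
```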

2.II.10F

Alice and Bill fight a paint-ball duel. Nobody has been hit so far and they are both left with one shot. Being exhausted, they need to take a breath before firing their last shot. This takes seconds for Alice and seconds for Bill. Assume these times are exponential random variables with means and , respectively.

Find the distribution of the (random) time that passes by before the next shot is fired. What is its standard deviation? What is the probability that Alice fires the next shot?

Assume Alice has probability of hitting whenever she fires whereas Bill never misses his target. If the next shot is a hit, what is the probability that it was fired by Alice?
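A simulation sketch of the duel, assuming the breathing times are independent exponentials with rates equal to the reciprocals of the given means. The minimum of independent exponentials is exponential with the summed rate (so its mean and standard deviation coincide), and Alice fires first with probability equal to her rate divided by the total. The last function applies Bayes' rule for the "hit" question, with `p_hit` standing in for Alice's elided hitting probability. All names are hypothetical.

```python
import random

def simulate_duel(mean_alice, mean_bill, trials=200000, seed=0):
    """Monte Carlo: (mean time to next shot, P(Alice fires first))."""
    rng = random.Random(seed)
    total = 0.0
    alice_first = 0
    for _ in range(trials):
        ta = rng.expovariate(1 / mean_alice)    # expovariate takes the rate
        tb = rng.expovariate(1 / mean_bill)
        total += min(ta, tb)
        alice_first += ta < tb
    return total / trials, alice_first / trials

def theory(mean_alice, mean_bill):
    la, lb = 1 / mean_alice, 1 / mean_bill
    return 1 / (la + lb), la / (la + lb)        # min is Exp(la + lb)

def prob_alice_given_hit(mean_alice, mean_bill, p_hit):
    """Bayes: Alice hits w.p. p_hit, Bill always hits."""
    _, pa = theory(mean_alice, mean_bill)
    return pa * p_hit / (pa * p_hit + (1 - pa))

print(simulate_duel(2.0, 3.0), theory(2.0, 3.0), prob_alice_given_hit(2.0, 3.0, 0.5))
```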

2.II.11F

Let be uniformly distributed on and define . Show that, conditionally on

the vector is uniformly distributed on the unit disc. Let denote the point in polar coordinates and find its probability density function for . Deduce that and are independent.

Introduce the new random variables

noting that under the above conditioning, are uniformly distributed on the unit disc. The pair may be viewed as a (random) point in with polar coordinates . Express as a function of and deduce its density. Find the joint density of . Hence deduce that and are independent normal random variables with zero mean and unit variance.
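The construction in this question appears to be the Marsaglia polar method; the exact transformation is elided above, so the form used here, $X = V_1\sqrt{-2\ln S / S}$ and $Y = V_2\sqrt{-2\ln S / S}$ with $S = V_1^2 + V_2^2$, is an assumption. The sketch conditions on the point falling inside the unit disc by rejection, then applies that transformation. The test checks sample means, variances and covariance against independent $N(0, 1)$ values.

```python
import math
import random

def polar_normal_pair(rng):
    """One draw of the Marsaglia polar method: two independent N(0, 1) values."""
    while True:
        v1, v2 = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        s = v1 * v1 + v2 * v2
        if 0.0 < s < 1.0:            # condition on (v1, v2) lying in the unit disc
            factor = math.sqrt(-2.0 * math.log(s) / s)
            return v1 * factor, v2 * factor

rng = random.Random(0)
print([polar_normal_pair(rng) for _ in range(3)])
```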

2.II.12F

Let be a ranking of the yearly rainfalls in Cambridge over the next years: assume is a random permutation of . Year is called a record year if for all (thus the first year is always a record year). Let if year is a record year and 0 otherwise.

Find the distribution of and show that are independent and calculate the mean and variance of the number of record years in the next years.

Find the probability that the second record year occurs at year . What is the expected number of years until the second record year occurs?
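For small $n$ the record indicators can be checked by enumerating all $n!$ equally likely rankings. The sketch computes, exactly, the mean and variance of the number of records and the probability that the second record falls in year $m$. These can be compared with the values that independence of the indicators predicts: $P(I_k = 1) = 1/k$ gives mean $\sum 1/k$ and variance $\sum (1/k)(1 - 1/k)$. Function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations

def record_years(ranking):
    """Indicator list: year i is a record if its value exceeds all earlier ones."""
    best = float("-inf")
    flags = []
    for value in ranking:
        flags.append(value > best)
        best = max(best, value)
    return flags

def record_stats(n):
    perms = list(permutations(range(1, n + 1)))
    w = Fraction(1, len(perms))                 # each ranking equally likely
    counts = [sum(record_years(p)) for p in perms]
    mean = sum(w * c for c in counts)
    var = sum(w * (c - mean) ** 2 for c in counts)
    # P(second record occurs in year m), m = 2..n
    second = {m: Fraction(0) for m in range(2, n + 1)}
    for p in perms:
        rec = [i + 1 for i, f in enumerate(record_years(p)) if f]
        if len(rec) >= 2:
            second[rec[1]] += w
    return mean, var, second

print(record_stats(4))
```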

2.I.3F

Define the covariance, , of two random variables and .

Prove, or give a counterexample to, each of the following statements.

(a) For any random variables

(b) If and are identically distributed, not necessarily independent, random variables then

2.I.4F

The random variable has probability density function

Determine , and the mean and variance of .

2.II.10F

Define the conditional probability of the event given the event .

A bag contains four coins, each of which when tossed is equally likely to land on either of its two faces. One of the coins shows a head on each of its two sides, while each of the other three coins shows a head on only one side. A coin is chosen at random, and tossed three times in succession. If heads turn up each time, what is the probability that if the coin is tossed once more it will turn up heads again? Describe the sample space you use and explain carefully your calculations.
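One way to organise the calculation: take the sample space to be (coin chosen, sequence of faces), update the prior over the two kinds of coin after the observed run of heads, then average the chance of a further head over the posterior. The sketch does this with exact fractions; `coins` and `prob_next_head` are illustrative names.

```python
from fractions import Fraction

# (prior probability of picking this kind of coin, P(head) on one toss)
coins = {"double-headed": (Fraction(1, 4), Fraction(1)),
         "one-headed": (Fraction(3, 4), Fraction(1, 2))}

def prob_next_head(observed_heads=3):
    # Joint probability of each coin type and the observed run of heads.
    joint = {c: prior * ph ** observed_heads for c, (prior, ph) in coins.items()}
    total = sum(joint.values())
    # Average the next-toss head probability over the posterior.
    return sum(joint[c] / total * coins[c][1] for c in coins)

print(prob_next_head())
```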

2.II.11F

The random variables and are independent, and each has an exponential distribution with parameter . Find the joint density function of

and show that and are independent. What is the density of ?

2.II.12F

Let be events such that for . Show that the number of events that occur satisfies

Planet Zog is a sphere with centre . A number of spaceships land at random on its surface, their positions being independent, each uniformly distributed over the surface. A spaceship at is in direct radio contact with another point on the surface if . Calculate the probability that every point on the surface of the planet is in direct radio contact with at least one of the spaceships.

[Hint: The intersection of the surface of a sphere with a plane through the centre of the sphere is called a great circle. You may find it helpful to use the fact that random great circles partition the surface of a sphere into disjoint regions with probability one.]

2.II.9F

Let be a positive-integer valued random variable. Define its probability generating function . Show that if and are independent positive-integer valued random variables, then .

A non-standard pair of dice is a pair of six-sided unbiased dice whose faces are numbered with strictly positive integers in a non-standard way (for example, ) and . Show that there exists a non-standard pair of dice and such that when thrown

total shown by and is total shown by pair of ordinary dice is

for all .

[Hint:
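One known answer is the pair of so-called Sicherman dice, with faces 1, 2, 2, 3, 3, 4 and 1, 3, 4, 5, 6, 8. A route via generating functions (the hint above is elided, so this is only a suggestion) uses the factorisation $s + s^2 + \dots + s^6 = s(1+s)(1+s+s^2)(1-s+s^2)$ and redistributes the factors between the two dice. The sketch simply verifies by enumeration that the distribution of totals matches that of ordinary dice.

```python
from collections import Counter
from itertools import product

def total_distribution(die1, die2):
    """Counts of each total over the 36 equally likely face pairs."""
    return Counter(a + b for a, b in product(die1, die2))

ordinary = [1, 2, 3, 4, 5, 6]
sicherman_1 = [1, 2, 2, 3, 3, 4]
sicherman_2 = [1, 3, 4, 5, 6, 8]
print(sorted(total_distribution(sicherman_1, sicherman_2).items()))
```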

2.I.3F

(a) Define the probability generating function of a random variable. Calculate the probability generating function of a binomial random variable with parameters and , and use it to find the mean and variance of the random variable.

(b) is a binomial random variable with parameters and is a binomial random variable with parameters and , and and are independent. Find the distribution of ; that is, determine for all possible values of .
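A sketch representing a probability generating function by its coefficient list $P(X = 0), P(X = 1), \dots$. The mean and variance come from $G'(1)$ and $G''(1) + G'(1) - G'(1)^2$, and multiplying PGFs of independent variables corresponds to convolving their coefficient lists. For part (b) the sketch assumes, as the elided parameters suggest, that both binomials share the same success probability. Function names are hypothetical.

```python
from fractions import Fraction
from math import comb

def binomial_pgf_coeffs(n, p):
    """Coefficients of G(s) = (1 - p + p s)^n, i.e. P(X = k) for k = 0..n."""
    p = Fraction(p)
    return [comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)]

def multiply(a, b):
    """Product of two PGFs = convolution of their coefficient lists."""
    out = [Fraction(0)] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out

def mean_var_from_pgf(c):
    g1 = sum(k * ck for k, ck in enumerate(c))               # G'(1)
    g2 = sum(k * (k - 1) * ck for k, ck in enumerate(c))     # G''(1)
    return g1, g2 + g1 - g1 ** 2

print(mean_var_from_pgf(binomial_pgf_coeffs(7, Fraction(1, 3))))
```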

2.I.4F

The random variable is uniformly distributed on the interval . Find the distribution function and the probability density function of , where

2.II.10F

The random variables and each take values in , and their joint distribution is given by

Find necessary and sufficient conditions for and to be (i) uncorrelated; (ii) independent.

Are the conditions established in (i) and (ii) equivalent?

2.II.11F

A laboratory keeps a population of aphids. The probability of an aphid passing a day uneventfully is . Given that a day is not uneventful, there is probability that the aphid will have one offspring, probability that it will have two offspring and probability that it will die, where . Offspring are ready to reproduce the next day. The fates of different aphids are independent, as are the events of different days. The laboratory starts out with one aphid.

Let be the number of aphids at the end of the first day. What is the expected value of ? Determine an expression for the probability generating function of .

Show that the probability of extinction does not depend on , and that if then the aphids will certainly die out. Find the probability of extinction if and .

[Standard results on branching processes may be used without proof, provided that they are clearly stated.]

2.II.12F

Planet Zog is a ball with centre . Three spaceships and land at random on its surface, their positions being independent and each uniformly distributed on its surface. Calculate the probability density function of the angle formed by the lines and .

Spaceships and can communicate directly by radio if , and similarly for spaceships and and spaceships and . Given angle , calculate the probability that can communicate directly with either or . Given angle , calculate the probability that can communicate directly with both and . Hence, or otherwise, show that the probability that all three spaceships can keep in touch (with, for example, communicating with via if necessary) is .

2.II.9F

State the inclusion-exclusion formula for the probability that at least one of the events occurs.

After a party the guests take coats randomly from a pile of their coats. Calculate the probability that no-one goes home with the correct coat.

Let be the probability that exactly guests go home with the correct coats. By relating to , or otherwise, determine and deduce that
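Inclusion-exclusion gives the no-match probability $p_n = \sum_{k=0}^{n} (-1)^k / k!$. Choosing which $r$ guests are lucky and deranging the rest gives the probability of exactly $r$ matches as $p_{n-r}/r!$. The sketch checks both formulas against brute-force enumeration of all coat assignments for small $n$; names are illustrative.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial

def p_no_match(n):
    """Inclusion-exclusion: P(no guest gets their own coat) = sum_k (-1)^k / k!."""
    return sum(Fraction((-1) ** k, factorial(k)) for k in range(n + 1))

def p_exactly(r, n):
    """C(n, r) choices of lucky guests times a derangement of the other n - r coats,
    divided by n!, which simplifies to p_{n-r} / r!."""
    return p_no_match(n - r) / factorial(r)

def brute_force(n):
    counts = [0] * (n + 1)
    for perm in permutations(range(n)):
        counts[sum(perm[i] == i for i in range(n))] += 1
    return [Fraction(c, factorial(n)) for c in counts]

print(p_no_match(4), brute_force(4))
```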

2.I.3F

Define the indicator function of an event .

Let be the indicator function of the event , and let be the number of values of such that occurs. Show that where , and find in terms of the quantities .

Using Chebyshev's inequality or otherwise, show that

2.I.4F

A coin shows heads with probability on each toss. Let be the probability that the number of heads after tosses is even. Show carefully that , , and hence find . [The number 0 is even.]
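The displayed relation is elided, so this sketch assumes the natural one: after $n$ tosses the number of heads is even exactly when it was even after $n - 1$ tosses and the last toss is a tail, or odd and the last toss is a head. That gives $p_n = (1-p)p_{n-1} + p(1 - p_{n-1})$ with $p_0 = 1$. The sketch iterates this and compares with the closed form $(1 + (1-2p)^n)/2$ that the recurrence yields.

```python
def even_heads_recurrence(p, n):
    """p_n via p_n = (1 - p) p_{n-1} + p (1 - p_{n-1}), starting from p_0 = 1."""
    value = 1.0                       # zero tosses: zero heads, and 0 is even
    for _ in range(n):
        value = (1 - p) * value + p * (1 - value)
    return value

def even_heads_closed_form(p, n):
    return (1 + (1 - 2 * p) ** n) / 2

print(even_heads_recurrence(0.3, 5), even_heads_closed_form(0.3, 5))
```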

2.II.10F

There is a random number of foreign objects in my soup, with mean and finite variance. Each object is a fly with probability , and otherwise is a spider; different objects have independent types. Let be the number of flies and the number of spiders.

(a) Show that denotes the probability generating function of a random variable . You should present a clear statement of any general result used.]

(b) Suppose has the Poisson distribution with parameter . Show that has the Poisson distribution with parameter , and that and are independent.

(c) Let and suppose that and are independent. [You are given nothing about the distribution of .] Show that . By working with the function or otherwise, deduce that has the Poisson distribution. [You may assume that as .]
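A simulation sketch of part (b): draw a Poisson number of objects, mark each as a fly independently with probability $p$, and look at the resulting counts. If the thinning result holds, the fly count should have mean and variance $\lambda p$, the spider count mean $\lambda(1-p)$, and the two counts should be uncorrelated. The Poisson sampler is Knuth's multiplication method, adequate for small $\lambda$; all names and parameter values are illustrative.

```python
import math
import random

def poisson(rng, lam):
    """Knuth's multiplication method; adequate for small lam."""
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def thin(lam, p, trials=100000, seed=0):
    """Return lists of fly and spider counts over independent soups."""
    rng = random.Random(seed)
    flies, spiders = [], []
    for _ in range(trials):
        n = poisson(rng, lam)
        f = sum(rng.random() < p for _ in range(n))   # each object is a fly w.p. p
        flies.append(f)
        spiders.append(n - f)
    return flies, spiders
```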

2.II.11F

Let be independent random variables each with the uniform distribution on the interval .

(a) Show that has density function

(b) Show that .

(c) You are provided with three rods of respective lengths . Show that the probability that these rods may be used to form the sides of a triangle is .

(d) Find the density function of for . Let be uniformly distributed on , and independent of . Show that the probability that rods of lengths may be used to form the sides of a quadrilateral is .
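A Monte Carlo check for parts (c) and (d), assuming the interval is $[0, 1]$. Rods form a non-degenerate polygon exactly when the longest is shorter than the sum of the others. For $k$ independent uniform lengths the probability of failure is $k/k!$: at most one rod can be at least as long as all the others combined, and the chance that a given one is equals $P(U_2 + \dots + U_k \le U_1) = 1/k!$. The estimates can be compared with $1 - k/k!$. The function name is hypothetical.

```python
import random

def polygon_prob(k, trials=200000, seed=0):
    """Estimate P(k independent U(0,1) lengths form a non-degenerate k-gon)."""
    rng = random.Random(seed)
    ok = 0
    for _ in range(trials):
        sides = [rng.random() for _ in range(k)]
        longest = max(sides)
        ok += longest < sum(sides) - longest     # polygon inequality
    return ok / trials

print(polygon_prob(3), polygon_prob(4))
```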

2.II.12F

(a) Explain what is meant by the term 'branching process'.

(b) Let be the size of the th generation of a branching process in which each family size has probability generating function , and assume that . Show that the probability generating function of satisfies for .

(c) Show that is the probability generating function of a non-negative integer-valued random variable when , and find explicitly when is thus given.

(d) Find the probability that , and show that it converges as to . Explain carefully why this implies that the probability of ultimate extinction equals .

2.II.9F

(a) Define the conditional probability of the event given the event . Let be a partition of the sample space such that for all . Show that, if ,

(b) There are urns, the th of which contains red balls and blue balls. You pick an urn (uniformly) at random and remove two balls without replacement. Find the probability that the first ball is blue, and the conditional probability that the second ball is blue given that the first is blue. [You may assume that .]

(c) What is meant by saying that two events and are independent?

(d) Two fair dice are rolled. Let be the event that the sum of the numbers shown is , and let be the event that the first die shows . For what values of and are the two events independent?
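For part (d) the 36 outcomes can be enumerated exactly. The sketch tests, for every possible sum and every value of the first die, whether the joint probability equals the product of the two marginal probabilities. The name `independent` is a hypothetical helper.

```python
from fractions import Fraction
from itertools import product

rolls = list(product(range(1, 7), repeat=2))   # (first die, second die)
w = Fraction(1, 36)

def independent(s, k):
    """Is {sum = s} independent of {first die shows k}?"""
    p_a = sum(w for a, b in rolls if a + b == s)
    p_b = sum(w for a, b in rolls if a == k)
    p_ab = sum(w for a, b in rolls if a + b == s and a == k)
    return p_ab == p_a * p_b

pairs = [(s, k) for s in range(2, 13) for k in range(1, 7) if independent(s, k)]
print(pairs)
```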

I play tennis with my parents; my chances of winning a game against Mum are and against Dad , where . We agreed to have three games, and their order can be (where I play against Dad, then Mum, then Dad again) or . The results of games are independent.

Calculate under each of the two orders the probabilities of the following events:

a) that I win at least one game,

b) that I win at least two games,

c) that I win at least two games in succession (i.e., games 1 and 2, or 2 and 3, or 1, 2 and 3),

d) that I win exactly two games in succession (i.e., games 1 and 2, or 2 and 3, but not 1, 2 and 3),

e) that I win exactly two games (i.e., 1 and 2, or 2 and 3, or 1 and 3, but not 1, 2 and 3).

In each case a) to e), determine which order of games maximizes the probability of the event. In case e), assume in addition that .
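Because there are only $2^3$ win/loss patterns, each event can be evaluated by enumeration for either order. In this sketch `p_mum` and `p_dad` are hypothetical labels for my winning chances against Mum and Dad (the original symbols are elided), and an order is written as a string such as `"DMD"`. Comparing the two orders for particular numerical values is a useful check on the algebra.

```python
from itertools import product

def event_probs(order, p_mum, p_dad):
    """Probabilities of events a) to e) for a three-game order such as "DMD"."""
    win = {"M": p_mum, "D": p_dad}
    probs = dict.fromkeys("abcde", 0.0)
    for outcome in product((True, False), repeat=3):    # True = I win that game
        weight = 1.0
        for opponent, won in zip(order, outcome):
            weight *= win[opponent] if won else 1 - win[opponent]
        wins = sum(outcome)
        two_run = (outcome[0] and outcome[1]) or (outcome[1] and outcome[2])
        probs["a"] += weight * (wins >= 1)
        probs["b"] += weight * (wins >= 2)
        probs["c"] += weight * bool(two_run)
        probs["d"] += weight * (bool(two_run) and wins == 2)
        probs["e"] += weight * (wins == 2)
    return probs

print(event_probs("DMD", 0.7, 0.4), event_probs("MDM", 0.7, 0.4))
```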

2.I.3F

The following problem is known as Bertrand's paradox. A chord has been chosen at random in a circle of radius . Find the probability that it is longer than the side of the equilateral triangle inscribed in the circle. Consider three different cases:

a) the middle point of the chord is distributed uniformly inside the circle,

b) the two endpoints of the chord are independent and uniformly distributed over the circumference,

c) the distance between the middle point of the chord and the centre of the circle is uniformly distributed over the interval .

[Hint: drawing diagrams may help considerably.]
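A Monte Carlo sketch of the three cases with $r = 1$. A chord is longer than the side $\sqrt{3}$ of the inscribed equilateral triangle exactly when its midpoint lies within distance $1/2$ of the centre, since its length is $2\sqrt{1 - d^2}$. The three sampling schemes give visibly different estimates (near 1/4, 1/3 and 1/2 respectively), which is the point of the paradox. The function name and sample size are illustrative.

```python
import math
import random

def bertrand(trials=200000, seed=0):
    """Monte Carlo estimates for cases a), b), c) with radius 1."""
    rng = random.Random(seed)
    a = b = c = 0
    for _ in range(trials):
        # a) midpoint uniform in the disc: its distance from the centre is sqrt(U)
        a += math.sqrt(rng.random()) < 0.5
        # b) endpoints uniform on the circle: chord length is 2|sin(delta / 2)|
        delta = rng.uniform(0, 2 * math.pi) - rng.uniform(0, 2 * math.pi)
        b += 2 * abs(math.sin(delta / 2)) > math.sqrt(3)
        # c) distance of the midpoint from the centre uniform on [0, 1]
        c += rng.random() < 0.5
    return a / trials, b / trials, c / trials

print(bertrand())
```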

2.I.4F

The Ruritanian authorities decided to pardon and release one out of three remaining inmates, and , kept in strict isolation in the notorious Alkazaf prison. The inmates know this, but cannot guess who among them is the lucky one; the waiting is agonising. A sympathetic but corrupt prison guard approaches and offers to name, in exchange for a fee, another inmate (not ) who is doomed to stay. He says: "This reduces your chances to remain here from to : will it make you feel better?" hesitates but then accepts the offer; the guard names .

Assume that indeed will not be released. Determine the conditional probability

and thus check the guard's claim, in three cases:

a) when the guard is completely unbiased (i.e., names any of and with probability if the pair is to remain jailed),

b) if he hates and would certainly name him if is to remain jailed,

c) if he hates and would certainly name him if is to remain jailed.
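An exact Bayes computation for the three cases. The inmates' symbols are elided, so this sketch labels them A, B and C, with A the inmate who pays and B the inmate the guard names. The released inmate is uniform. If B is released the guard must name C, and if C is released he must name B. Only when A is released does the guard have a choice, and the single parameter `p_name_b_if_a_released` encodes his bias. The function name is hypothetical.

```python
from fractions import Fraction

INMATES = ("A", "B", "C")

def p_a_stays_given_b_named(p_name_b_if_a_released):
    """P(A stays | guard names B), where A is the inmate who asked."""
    prior = Fraction(1, 3)                       # released inmate is uniform
    name_b = {"A": Fraction(p_name_b_if_a_released),   # guard's free choice
              "B": Fraction(0),                  # B released: must name C
              "C": Fraction(1)}                  # C released: must name B
    p_b_named = sum(prior * name_b[r] for r in INMATES)
    p_a_released_and_b_named = prior * name_b["A"]
    return 1 - p_a_released_and_b_named / p_b_named

# a) unbiased, b) always names B when possible, c) always names C when possible
print([p_a_stays_given_b_named(x) for x in (Fraction(1, 2), 1, 0)])
```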

2.II.10F

A random point is distributed uniformly in a unit circle so that the probability that it falls within a subset is proportional to the area of . Let denote the distance between the point and the centre of the circle. Find the distribution function , the expected value and the variance .

Let be the angle formed by the radius through the random point and the horizontal line. Prove that and are independent random variables.

Consider a coordinate system where the origin is placed at the centre of . Let and denote the horizontal and vertical coordinates of the random point. Find the covariance and determine whether and are independent.

Calculate the sum of expected values . Show that it can be written as the expected value and determine the random variable .
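A simulation sketch for the disc questions: uniform points in the unit disc are drawn by rejection from the square $[-1, 1]^2$, and summary statistics of the distance $R$ and the coordinates are computed. The estimates can be compared with exact answers derived from $F_R(r) = r^2$. The test also illustrates why zero covariance does not imply independence here: both coordinates can exceed 0.8 in absolute value separately, but never together. Names are illustrative.

```python
import math
import random

def sample_disc(trials=200000, seed=0):
    """Uniform points in the unit disc by rejection from the square [-1, 1]^2."""
    rng = random.Random(seed)
    pts = []
    while len(pts) < trials:
        x, y = rng.uniform(-1, 1), rng.uniform(-1, 1)
        if x * x + y * y <= 1:
            pts.append((x, y))
    return pts

def summaries(pts):
    """(mean of R, variance of R, covariance of X and Y)."""
    n = len(pts)
    radii = [math.hypot(x, y) for x, y in pts]
    mean_r = sum(radii) / n
    var_r = sum((r - mean_r) ** 2 for r in radii) / n
    mean_x = sum(x for x, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    cov_xy = sum(x * y for x, y in pts) / n - mean_x * mean_y
    return mean_r, var_r, cov_xy

print(summaries(sample_disc()))
```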

2.II.11F

Dipkomsky, a desperado in the wild West, is surrounded by an enemy gang and fighting tooth and nail for his survival. He has guns, , pointing in different directions and tries to use them in succession to give an impression that there are several defenders. When he turns to a subsequent gun and discovers that the gun is loaded he fires it with probability and moves to the next one. Otherwise, i.e. when the gun is unloaded, he loads it with probability or simply moves to the next gun with complementary probability . If he decides to load the gun he then fires it or not with probability and after that moves to the next gun anyway.

Initially, each gun had been loaded independently with probability . Show that if after each move this distribution is preserved, then . Calculate the expected value and variance Var of the number of loaded guns under this distribution.

[Hint: it may be helpful to represent as a sum of random variables taking values 0 and 1.]

2.II.12F

A taxi travels between four villages, , situated at the corners of a rectangle. The four roads connecting the villages follow the sides of the rectangle; the distance from to and to is 5 miles and from to and to miles. After delivering a customer the taxi waits until the next call, then goes to pick up the new customer and takes him to his destination. The calls may come from any of the villages with probability and each customer goes to any other village with probability . Naturally, when travelling between a pair of adjacent corners of the rectangle, the taxi takes the straight route; otherwise (when it travels from to or to , or vice versa) the choice of route does not matter. Distances within a given village are negligible. Let be the distance travelled to pick up and deliver a single customer. Find the probabilities that takes each of its possible values. Find the expected value and the variance Var .